Reasoning Pose-aware Placements with Semantic Labels - Brandname-based Affordance Prediction and Cooperative Dual-Arm Active Manipulation More Information

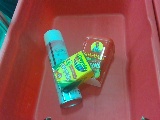

The Amazon Picking/Robotics Challenges showed significant progress in object picking from a cluttered scene, yet object placing remains challenging. Pose-aware placing based on human and machine readable pieces on an object is useful. For example, the brandname of an object placed on a shelf should be facing the human customers. Similarly, the barcode of an object placed on a conveyer should be facing a machine scanner. There are robotic vision challenges in the object placing task: a) the semantics and geometry of the object to be placed need to be analysed jointly; b) and the occlusions among objects in a cluttered scene could make it hard for proper understanding and manipulation. To overcome these challenges, we develop a pose-aware placing system by spotting the semantic labels (e.g., brandnames) of objects in a cluttered tote, and then carrying out a sequence of actions to place the objects on a shelf or a conveyor with desired poses. Our major contributions include 1) providing an open benchmark dataset of objects, brandnames, and barcodes with multi-view segmentation for training and evaluations; 2) carrying out comprehensive evaluations for our brandname-based fully convolutional network (FCN) that can predict affordance and grasp to achieve pose-aware placing, whose success rates decrease along with clutters; 3) showing that active manipulation with two cooperative manipulators and grippers can effectively handle occlusions of brandnames. We analyzed the success rates and discussed the failure cases to provide insights for future applications.

Development

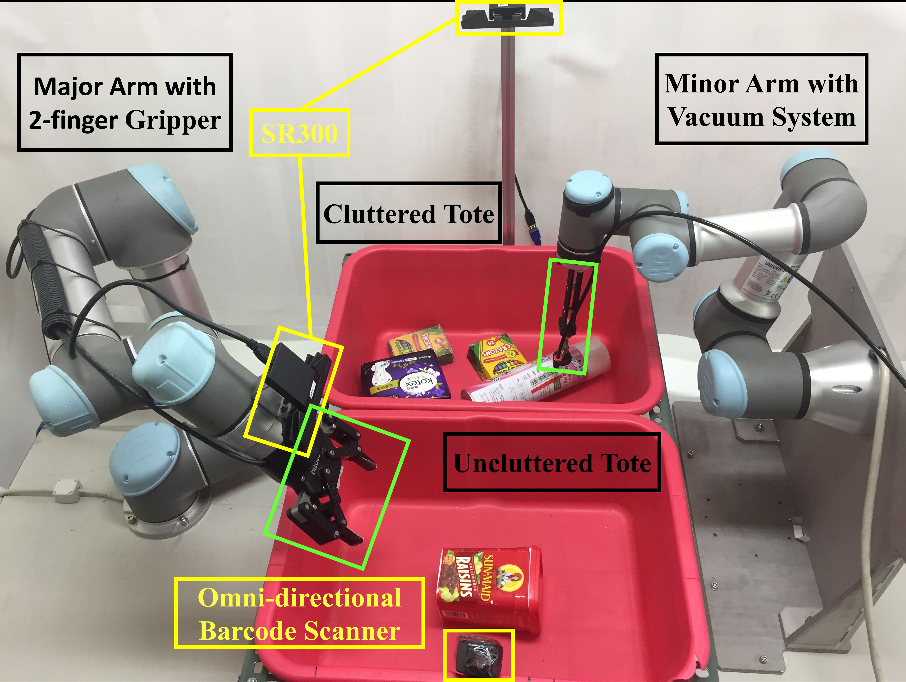

Robot System

Collaborative robotic system: we propose a dual-arm cooperative system to actively manipulate the scene to improve perception for placing. The gripper arm moves an occluding object to reveal information (the invisible brandname) hidden underneath, and the two-finger arm further predicts grasp using the brandname and completes pose-aware placing.

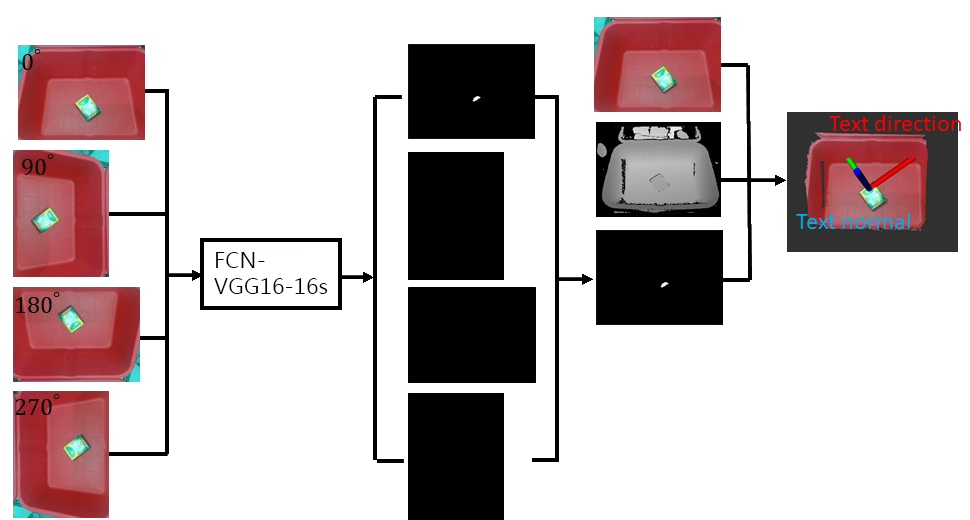

Brandname Prediction

In real scenario, the text angle could be 0 360 degree. To make the vision system detect text as much as possible without moving the robot arm. The image from SR300 is rotated at 0, 90, 180, 270, and then converted to gray images which are used as inputs as FCN model. Then each connected segmented text is bounded by a rotated box. Finally, these prediction results are rotated and combined as a final prediction mask which is used for 6D text pose estimation.

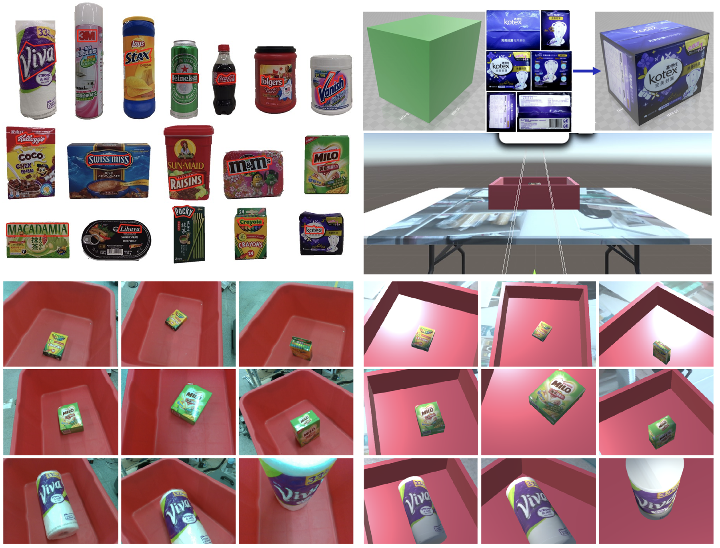

Virtual Data Generator

Data collection in virtual environment: Data collection of training data in real (left) and virtual (right) environments. Upper-left: Samples of objects and their brandnames (red polygons). Top-right: creating an object model via the 3D builder in Unity. Each scene contains only one object, given the findings that batch-training with a single object could yield deep models that perform good inference results on scenes with multiple objects.

It will be released soon.

Our paper references three datasets (all of them available for download):

• Real Training Dataset for brandname and object segmentation

• Virtual Training Dataset for brandname and object segmentation

• Benchmark Dataset for brandname and object segmentation

Our training dataset has two collections:

1) the one from real environment and robot includes image variences of the illumination changes, object reflectiveness, and different tint. 2) An virtual environment similar to the

real environment settings for comparisons.

In terms of file structure, the RGB-D camera sequence for each scene is saved into a corresponding folder by its name (‘scene_000000’, ‘scene_000001’, etc.).

Real Brandname and Object Segmentation Dataset Download: real_data.tar.gz (4 GB)

We select 20 products in our dataset, and the selection is based on the following criteria: 1) the physical height of brandname region is larger than 1.5 centimeters, 2) single line brandname, and 3) the brandname instance only occurs once on the object. There are 46 scenes × 31 views × 20 objects, resulting in 28,520 images. Among them, there are 26 scenes, total 16,120 images, including brandname label. The folder contents are as follows:

img/(object_name)

• XXXXXX.jpg - a 24-bit JPEG RGB color image captured from the Realsense camera.

mask/(object_name)

• XXXXXX.png - 8-bit PNG image of ground truth brandname and object segmentation labels using the annotation tool LabelMe. The pixel would be 255 if it is a object, 0 if not.

Virtual Brandname Segmentation Dataset Download: virtual_brandname.tar.gz (6 GB)

1) Objects: For object-oriented dataset, due to the differ- ent shape of the object, there are 3 kinds of placing strategy and number of scene. The dataset have cylinder (8 objects): 116 scenes, cuboid (10 bjects): 108 scenes, rounded cuboid (2 objects): 72 scenes, totally 2152 scenes × 15 views × 4 modes of adding noise, resulting in 129,120 images.

2) Brandnames: The brandname-oriented dataset has 20 objects × 10 scenes × 54 views × 4 modes of adding noise, resulting in 43,200 images. Among them, there are 24,624 images including brandname label and 16,508 images including barcode label.

(object_name)/Scene

• X_original.png - a 24-bit PNG RGB color image captured from unity's virtual camera.

• X_label.png - 8-bit PNG image of ground truth brandname and barcode segmentation labels. The pixel would be 255 in value if it is brandname, and barcode in 128.

• X_seg.png - a 24-bit PNG RGB color image regarded as ground truth object segmentation labels. The pixel would be red if it is an object, green if it is tote, blue if it is out of tote (background).

Benchmark Brandname and Object Segmentation Dataset Download: benchmark (203 MB)

Benchmark Brandname and Object Segmentation Experiment Record Template Download: Record (162.3 kB)

There are six kinds of benchmark dataset for object and brandname separately, and all of them are seen from SR300 camera’s viewpoints during experiment. All the scenes were captured in our lab environment (at NCTU), with strong overhead directional lights. In total, there are 1010 images with manually annotated ground truth segmentation labels.

Each scene (in addition to the files described here), contains:

• img/X_obj/scene-XXXXXX - a 24-bit JPEG RGB color image captured from the Realsense camera.

• mask/X_obj/scene-XXXXXX - 8-bit PNG image of ground truth object segmentation labels using the annotation tool LabelMe. Divide each pixel value by 12 to obtain label range [0,21].

Department of Electrical and Computer Engineering, NCTU, Team Lead

Department of Electrical and Computer Engineering, NCTU

Department of Electrical and Computer Engineering, NCTU

Department of Electrical and Computer Engineering, NCTU

Department of Electrical and Computer Engineering, NCTU

Department of Computer Science, University of Massachusetts at Boston, USA

Department of Electrical and Computer Engineering, NCTU

Mitsubishi Electric Research Laboratories, Cambridge, MA, USA

Delta Research Center, Taiwan

Department of Computer Science, George Mason University

Department of Electrical and Computer Engineering, NCTU

If you are interested, please visit our ARG-NCTU website or contact us with following information.

(EE627)1001 University Road, Hsinchu,

Taiwan 30010, ROC

Yung-Shan Su

michael1994822@gmail.com